Interactive demonstrations for ML courses

Machine learning is becoming more and more popular, and there are now many demonstrations available on the internet that illustrate the ideas behind algorithms in a more vivid way (though many of them are Java applets, which are not so easy to use).

There are certain arguments against overloading courses with interactive material, since it frequently keeps students from diving into the mathematics behind machine learning, but generally I consider demonstrations helpful.

Here are some demos which I find useful:

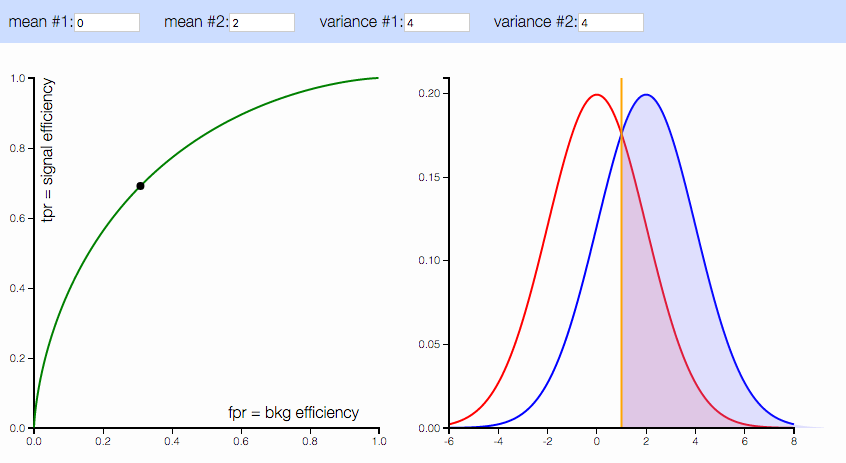

Demo of ROC curve

The ROC curve is a fairly simple subject, but a demo is a nice way to show some important limit cases. I have prepared a simple HTML demo for this.

There is a mini-version and a detailed post.
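For those who prefer code to sliders, here is a minimal sketch of how the points of a ROC curve can be obtained by sweeping a threshold over classifier scores. The function names `roc_points` and `auc` and the toy inputs are my own assumptions for illustration; this is not the code behind the demo.

```python
def roc_points(labels, scores):
    """Return (fpr, tpr) pairs obtained by sweeping a threshold over scores.

    labels: sequence of 0/1; scores: higher means "more likely positive".
    Items with equal scores are passed by the threshold together.
    """
    pairs = sorted(zip(scores, labels), key=lambda p: -p[0])
    n_pos = sum(1 for _, y in pairs if y == 1)
    n_neg = len(pairs) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative example")
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # move the threshold just below all items that share this score
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def auc(points):
    """Area under the ROC curve, computed with the trapezoid rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

The limit cases are easy to check with it: perfectly separated scores give an area of 1, perfectly inverted scores give 0, and identical scores for all examples give the diagonal with area 0.5.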

Demos by Andrej Karpathy

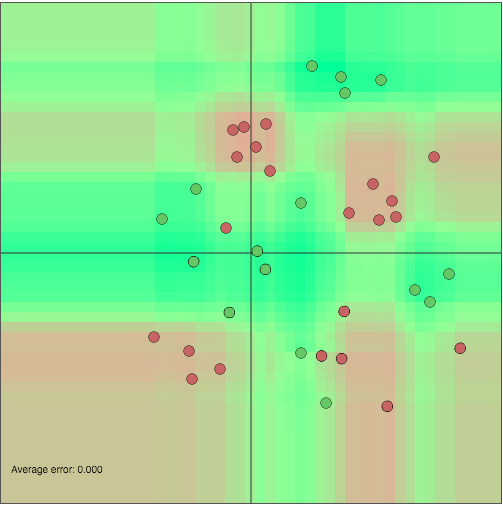

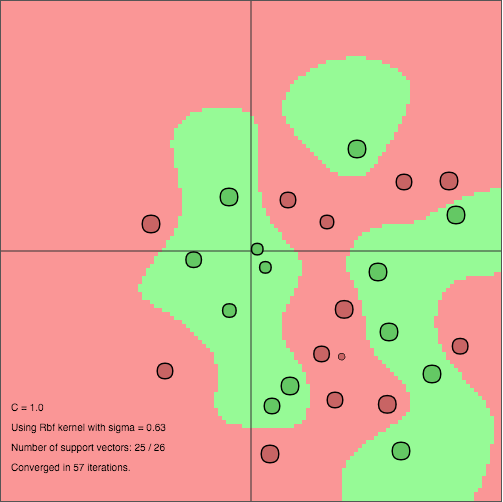

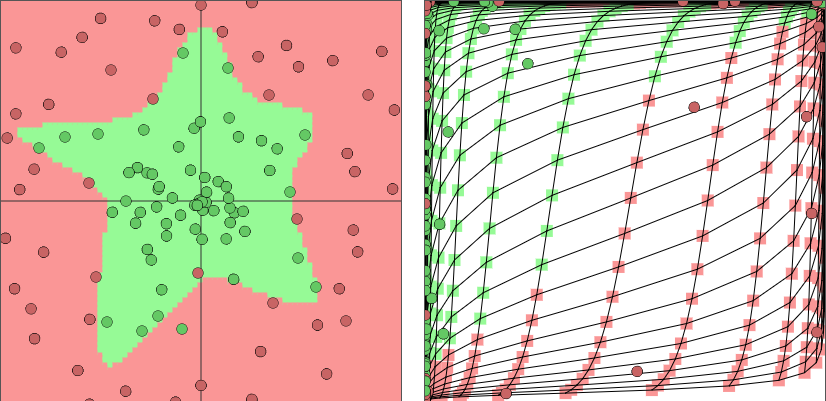

Andrej Karpathy prepared a number of demos with the same interface for different classification algorithms:

| RandomForest | SVM | Neural network |

There are also other interesting demonstrations, such as t-SNE and RNNs; you’re welcome to check Andrej’s GitHub page.

I also highly recommend looking at the other convnet.js demos.

Decision tree

I didn’t find a good interactive playground for a single decision tree. While one can use a random forest with a single tree, that doesn’t help much in understanding the splitting criterion.

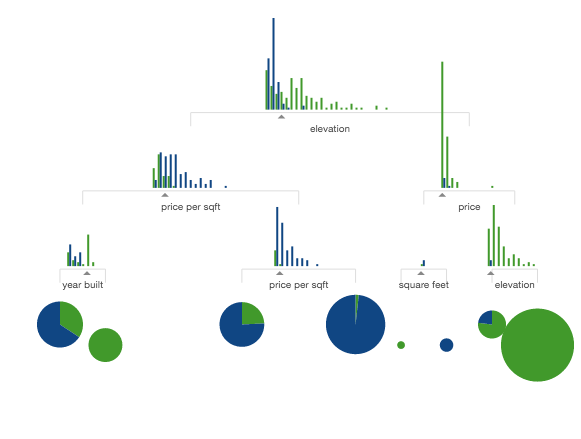

However, there is a nice intro to data analysis that demonstrates the process of building a tree. It’s not super useful during classes, but it can be given as additional material.

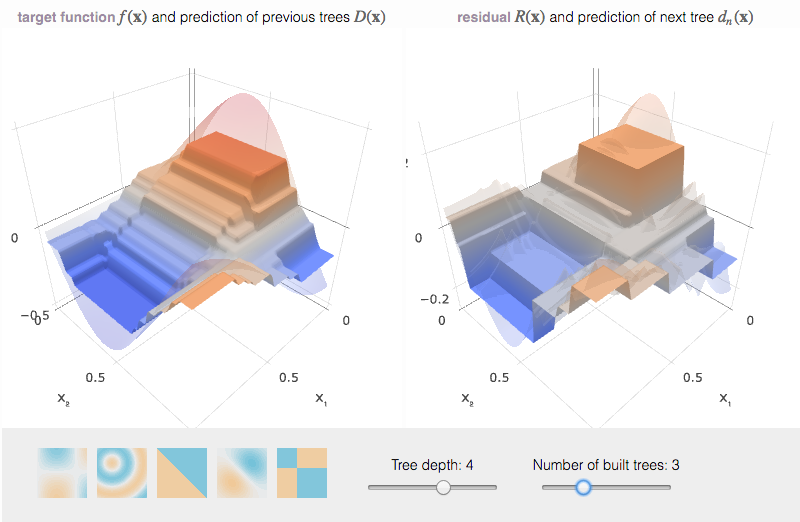
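Since the splitting criterion is the part that is hard to see in the demos, here is a minimal sketch of one common choice: picking the threshold on a single feature that minimizes the weighted Gini impurity of the two children. The names `gini` and `best_split` are hypothetical, and Gini impurity is just one possible criterion, not necessarily the one used in the materials above.

```python
def gini(labels):
    """Gini impurity of a list of class labels: 1 - sum_k p_k^2."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for y in labels:
        counts[y] = counts.get(y, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())


def best_split(xs, ys):
    """Find the best threshold on one feature.

    Candidate thresholds lie halfway between consecutive distinct feature
    values. Returns (threshold, weighted_impurity); the threshold is None
    if no split improves on the impurity of the unsplit node.
    """
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    xs = [xs[i] for i in order]
    ys = [ys[i] for i in order]
    best = (None, gini(ys))  # baseline: impurity of the parent node
    for i in range(1, len(xs)):
        if xs[i] == xs[i - 1]:
            continue  # no threshold fits between equal values
        threshold = (xs[i] + xs[i - 1]) / 2
        left, right = ys[:i], ys[i:]
        impurity = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if impurity < best[1]:
            best = (threshold, impurity)
    return best
```

A tree is then built by applying the same search recursively to the left and right parts, over all features.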

Gradient Boosting (and decision tree for regression)

An explanatory post about how gradient boosting works.

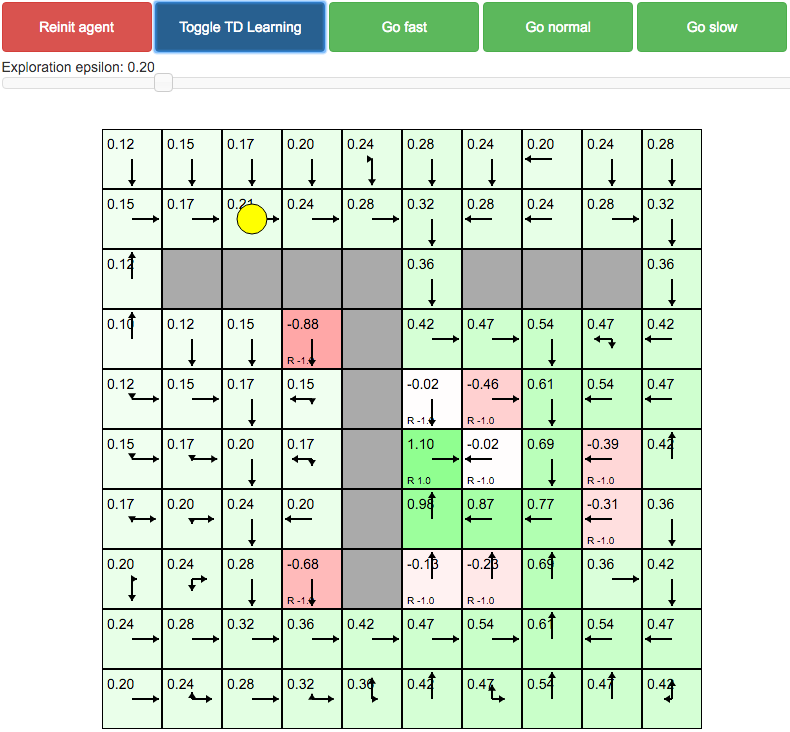
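The core idea can also be written down in a few lines. Below is a minimal sketch of gradient boosting for regression with squared loss on a single feature, using depth-1 trees (stumps) as base learners. The names `fit_stump` and `gradient_boosting` and the default parameters are assumptions chosen for illustration; this is not the implementation from the post.

```python
def fit_stump(xs, residuals):
    """Fit a depth-1 regression tree on one feature by minimizing squared error.

    Assumes xs contains at least two distinct values.
    """
    best = None
    values = sorted(set(xs))
    for lo, hi in zip(values, values[1:]):
        t = (lo + hi) / 2
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lmean = sum(left) / len(left)
        rmean = sum(right) / len(right)
        sse = (sum((r - lmean) ** 2 for r in left)
               + sum((r - rmean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, t, lmean, rmean)
    _, t, lmean, rmean = best
    return lambda x: lmean if x <= t else rmean


def gradient_boosting(xs, ys, n_trees=50, learning_rate=0.3):
    """Return a prediction function built by boosting stumps.

    For squared loss the negative gradient is the residual y - F(x), so each
    new stump is fitted to the current residuals and added with a small step.
    """
    base = sum(ys) / len(ys)  # start from the constant prediction
    stumps = []

    def predict(x):
        return base + learning_rate * sum(s(x) for s in stumps)

    for _ in range(n_trees):
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict
```

Calling `gradient_boosting(xs, ys)` returns a function that can be evaluated at any point; lowering `learning_rate` makes each tree contribute less, so more trees are needed to reach the same training error.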

Mini-course on reinforcement learning

There is also a very nice mini-course on reinforcement learning in the format of a set of interactive demonstrations, which I highly recommend as separate reading, but not as part of another course.

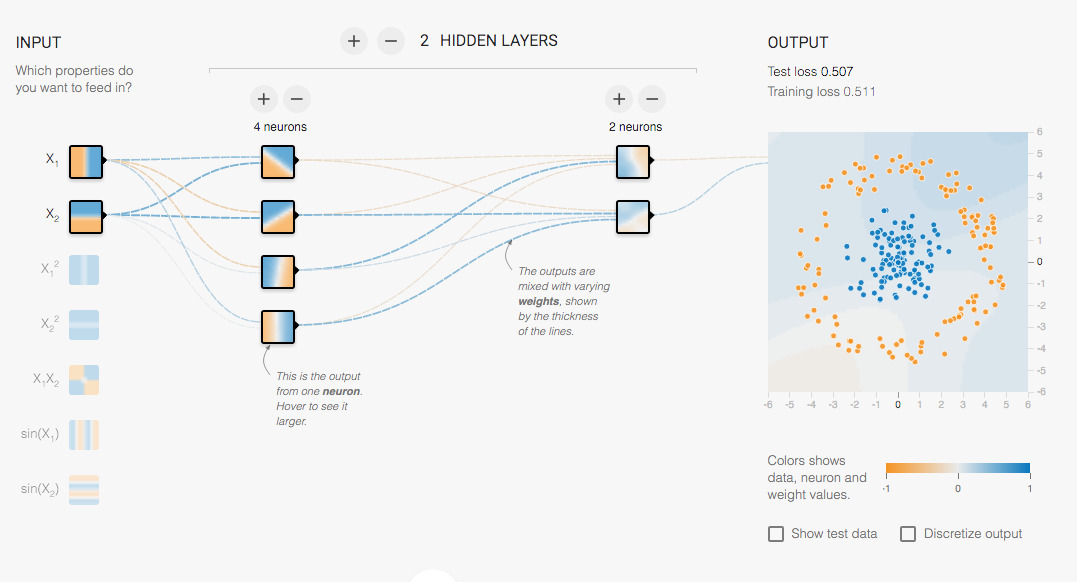

Neural network demo by TensorFlow team

What was really missing in Karpathy’s presentation of neural networks was a demonstration of the activations of inner neurons. While I was planning when I could contribute this feature, the TensorFlow team published an awesome demo, which already has this and also provides more knobs to play with.

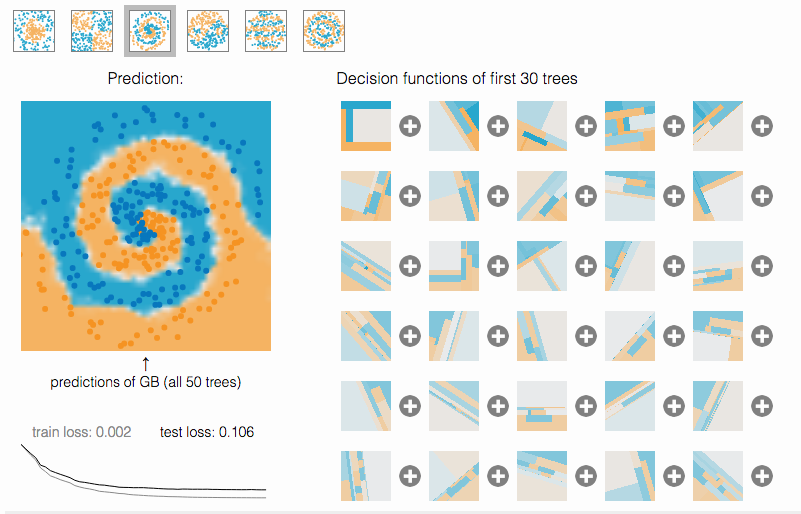

Gradient boosting demo for classification

You can play with gradient boosting online too with my demonstration:

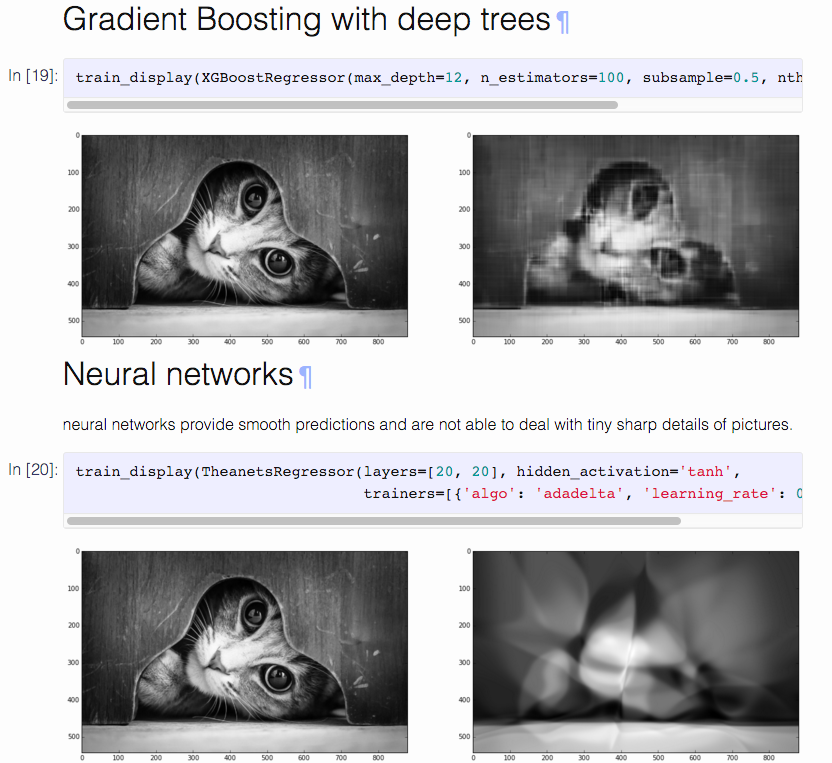

Reconstructing an image with different ML techniques

Strictly speaking, this is not an interactive demonstration, since you can’t play with it online. But I show it at the end of the course as a fast and pleasant way to revisit the whole course.

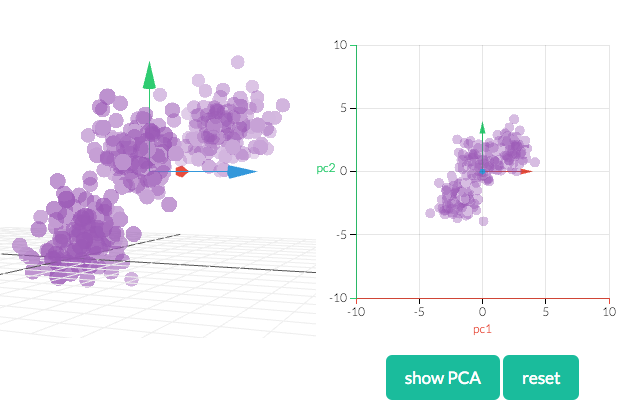

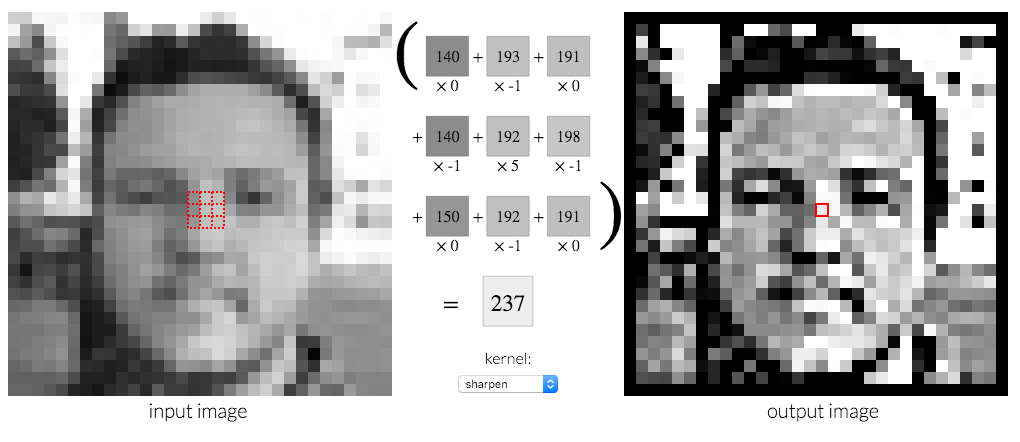

Demonstrations by Victor Powell

There is a nice mini-project “Explained Visually” by Victor Powell with demonstrations of mathematical concepts.

While most of those cover things that are too simple (like eigenvectors, sine, and ordinary least squares), there are also some more useful visualizations.

| PCA | Convolution (image kernels) | Markov Chains |

Dimensionality reduction

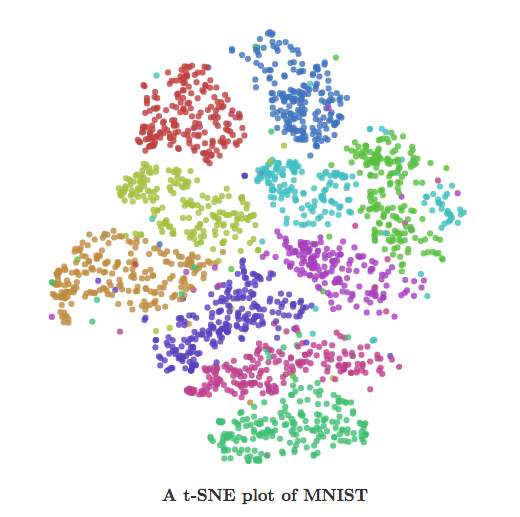

A post by Christopher Olah visualizes different dimensionality reduction algorithms using the MNIST dataset.

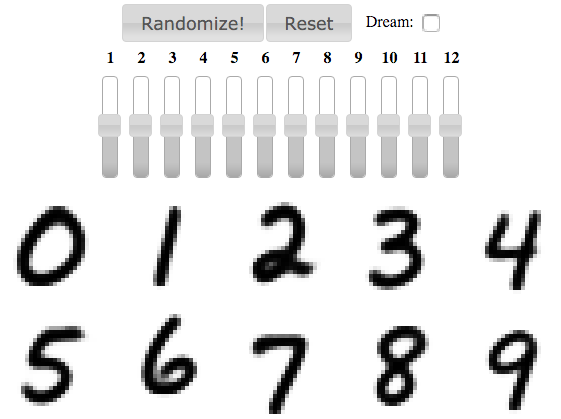

Variational AutoEncoder

A demonstration by Durk Kingma, where you can play with hidden parameters and let networks “dream” MNIST digits.

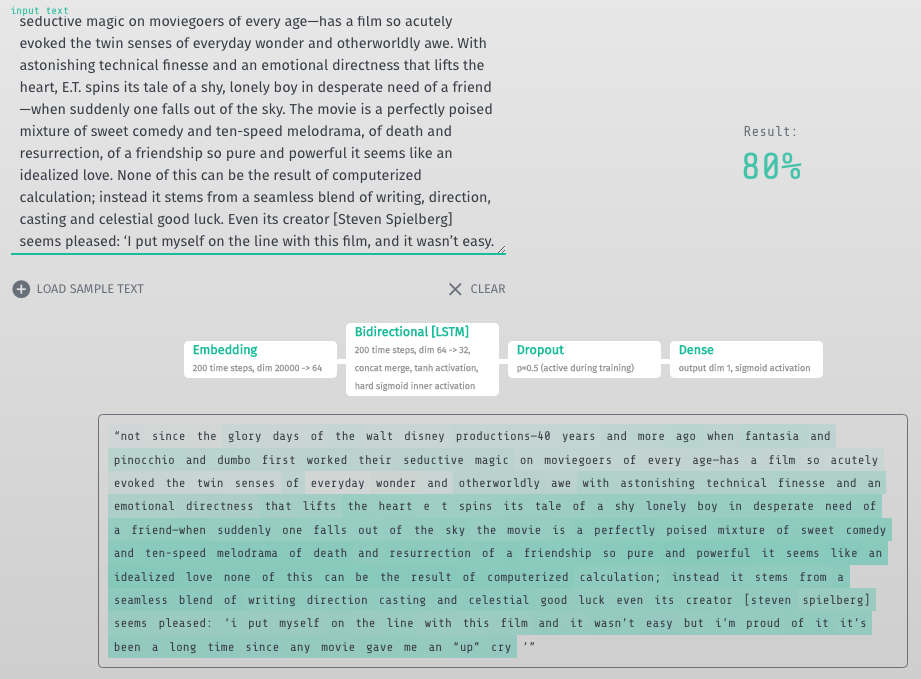

Keras JS demonstrations

Classical applications of neural networks right in the browser. The demonstration has a very handy interface and can also use the GPU directly from the browser.

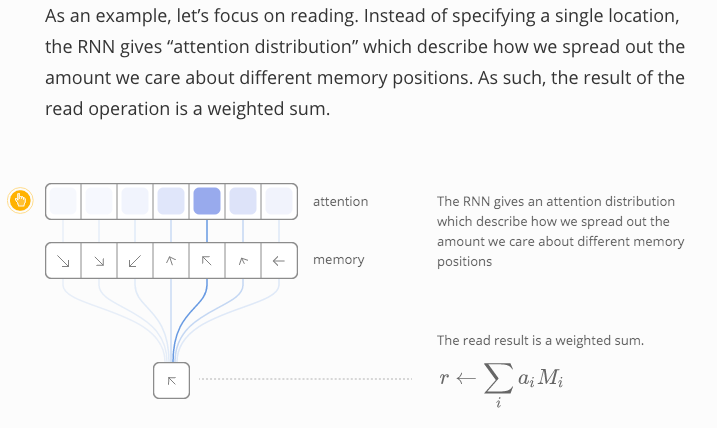

Attention and memory in neural networks

These topics are explained in this post by the Google Brain team.

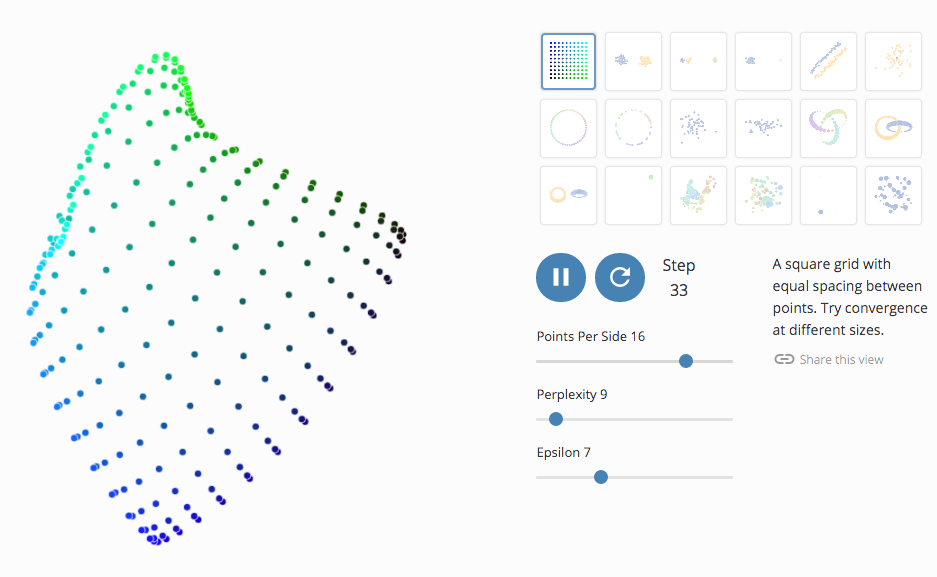

Misread t-SNE

Another long read from the Google Brain team is devoted to understanding t-SNE and its output.

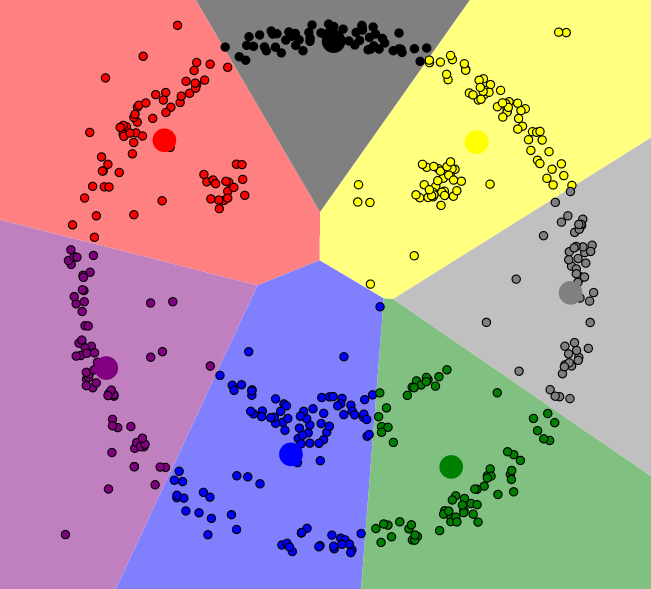

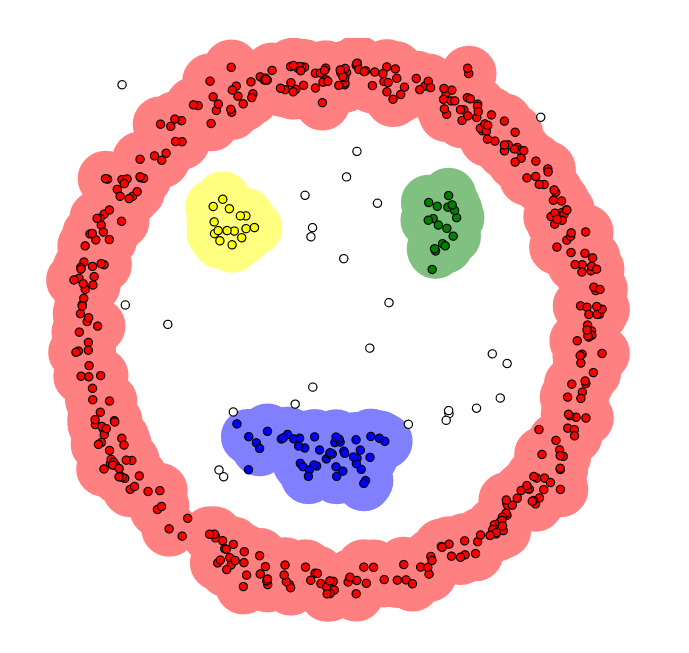

Clustering

A couple of posts about k-means clustering and DBSCAN clustering were written by Naftali Harris.

You can track the process of clustering and choose different toy data examples.

| k-means | DBSCAN clustering |

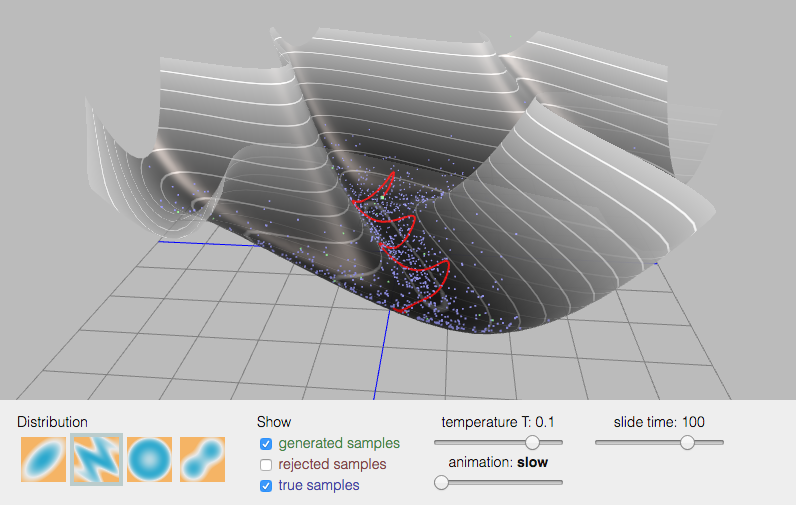

Markov chain Monte Carlo

Monte Carlo algorithms play an important role in many scientific applications. In Bayesian machine learning, MCMC is a way to model complex posterior distributions, and there is a demonstration of MCMC algorithms.
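To give a feeling for what such algorithms do, here is a minimal sketch of the simplest one, random-walk Metropolis, for a one-dimensional target known only up to a normalizing constant. The function name `metropolis`, its parameters, and the standard normal toy target are assumptions for illustration; the linked demonstration covers more algorithms than this one.

```python
import math
import random


def metropolis(log_density, start, n_samples, step=1.0, seed=0):
    """Random-walk Metropolis sampler for a 1D unnormalized log-density."""
    rng = random.Random(seed)
    x = start
    logp = log_density(x)
    samples = []
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step)  # symmetric Gaussian proposal
        logp_new = log_density(proposal)
        # accept with probability min(1, p(proposal) / p(x))
        if rng.random() < math.exp(min(0.0, logp_new - logp)):
            x, logp = proposal, logp_new
        samples.append(x)  # a rejected move repeats the current point
    return samples


# Toy target (assumed for illustration): standard normal up to a constant.
samples = metropolis(lambda x: -0.5 * x * x, start=3.0, n_samples=20000)
kept = samples[2000:]  # discard the first part of the chain as burn-in
mean = sum(kept) / len(kept)
var = sum((s - mean) ** 2 for s in kept) / len(kept)
```

Only differences of log-densities enter the acceptance rule, which is why the normalizing constant of the posterior never has to be computed.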

Convolutional arithmetic

Animated visualizations of the different variants of convolution used in practice.
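The arithmetic behind such animations fits in two small functions. This is a sketch of the standard output-size formulas along one spatial dimension; the function names are hypothetical, and I am assuming the usual variants (stride, zero padding, dilation, transposed convolution) rather than restating what the animations cover.

```python
def conv_output_size(input_size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length of a convolution along one dimension."""
    # a dilated kernel covers dilation * (kernel_size - 1) + 1 input positions
    effective_kernel = dilation * (kernel_size - 1) + 1
    return (input_size + 2 * padding - effective_kernel) // stride + 1


def transposed_conv_output_size(input_size, kernel_size, stride=1, padding=0):
    """Output length of a transposed convolution (no output padding)."""
    return stride * (input_size - 1) + kernel_size - 2 * padding
```

For example, `conv_output_size(5, 3, stride=2, padding=1)` gives 3, and `transposed_conv_output_size(3, 3, stride=2, padding=1)` maps a length of 3 back to 5.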

Missing things

Demonstrations I haven’t found so far (but it would be nice to have them):

- nice standalone demonstration of decision trees

- gradient boosting

- Expectation-Maximization algorithm for fitting mixtures

- Viterbi algorithm

- RBMs have gone out of fashion, but it would be nice, e.g., to demonstrate contrastive divergence.

There are many things connected to RNNs / text / word embeddings, but I don’t mention those here.
