Automatic reweighting with gradient boosting

See my later, extended post with many more details: reweighting with BDT

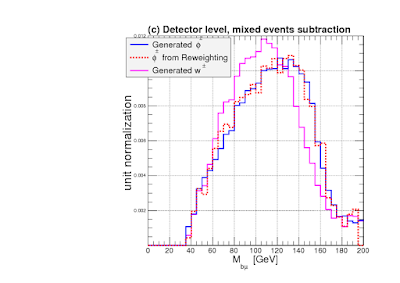

In high energy physics there is frequently used trick: reweighting of distribution. The most frequent reason to reweight data is to introduce some kind of consistency between real data and simulated one. The typical picture of how it looks like you can find below:

The aim is to find weights for Monte Carlo (MC) events that make the original distribution look like the target distribution. I've found a way to use gradient boosting over regression trees here, though it differs from the usual GBRT in its loss, update rule and splitting criterion.

Update rule of reweighting GB

Here is how the event weights are updated:

$$w_{new} = \begin{cases} w, & \text{event from target distribution} \\ e^{pred}\, w, & \text{event from original distribution} \end{cases}$$

So we are looking for a multiplier $e^{pred}$ that reweights the original distribution, where $pred$ is the raw prediction of gradient boosting (the sum of the contributions of all trees built so far).
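A minimal NumPy sketch of this update rule, assuming events of both samples are stored in one array together with a boolean mask `is_original` that marks events of the original (MC) sample. The function name `update_weights` is hypothetical and not taken from any library.

```python
import numpy as np

def update_weights(weights, is_original, pred):
    """Apply the reweighting update rule.

    weights:     current event weights
    is_original: boolean mask, True for events of the original (MC) sample
    pred:        raw boosting prediction for each event
    Target events keep their weight; original events are multiplied by exp(pred).
    """
    weights = np.asarray(weights, dtype=float)
    pred = np.asarray(pred, dtype=float)
    return np.where(is_original, np.exp(pred) * weights, weights)

# Two original events followed by two target events.
weights = np.array([1.0, 1.0, 1.0, 1.0])
is_original = np.array([True, True, False, False])
pred = np.array([np.log(2.0), 0.0, 5.0, 5.0])
new_weights = update_weights(weights, is_original, pred)
# The first original event's weight is doubled; target events ignore pred.
```

Note that the prediction for target events is computed but never used: only the original sample is being reweighted.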

Splitting criterion of reweighting GB

The most obvious way to select splits is to maximize the binned $\chi^2$ statistic, so we are looking for a tree that splits the space into the most 'informative' cells, where the difference between the distributions is significant.

$$ \chi^2 = \sum_{\text{bins}} \dfrac{ (w_{target} - w_{original} )^2}{w_{target} + w_{original} } $$

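Here $w_{target}$ and $w_{original}$ are the total weights of target and original events falling into a bin (a leaf of the tree). I chose a slightly more symmetric version of $\chi^2$, though this was not necessary.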
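To make the criterion concrete, here is a sketch that scores a split with this symmetric $\chi^2$ and picks the best threshold on a single feature by brute force. This is a simplification under my own assumptions: a real tree would search over all features and grow several levels, and `binned_chi2` and `best_threshold` are hypothetical helper names.

```python
import numpy as np

def binned_chi2(cell, weights, is_original, n_cells):
    """Symmetric chi^2 summed over cells (leaves).

    cell: integer cell index for each event
    """
    # Total target and original weight in each cell.
    t = np.bincount(cell, weights=np.where(is_original, 0.0, weights), minlength=n_cells)
    o = np.bincount(cell, weights=np.where(is_original, weights, 0.0), minlength=n_cells)
    total = t + o
    mask = total > 0  # skip empty cells to avoid 0/0
    return np.sum((t[mask] - o[mask]) ** 2 / total[mask])

def best_threshold(x, weights, is_original):
    """Try every midpoint between sorted unique values of x as a split
    into two cells and return (threshold, chi2) with the largest chi2."""
    x = np.asarray(x, dtype=float)
    values = np.unique(x)
    best = (None, -np.inf)
    for thr in (values[:-1] + values[1:]) / 2:
        cell = (x > thr).astype(int)  # cell 0: x <= thr, cell 1: x > thr
        score = binned_chi2(cell, weights, is_original, n_cells=2)
        if score > best[1]:
            best = (thr, score)
    return best

# Original events sit at small x, target events at large x.
x = np.array([0.0, 1.0, 2.0, 3.0])
is_original = np.array([True, True, False, False])
weights = np.ones(4)
threshold, score = best_threshold(x, weights, is_original)
# The split at 1.5 separates the two samples completely, giving chi2 = 2 + 2 = 4.
```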

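Computing the optimal value in a leaf

Since we want to remove the difference between the distributions, the optimal leaf value is the one for which the original weights in the leaf, multiplied by $e^{\text{leaf\_value}}$, sum to the total target weight in that leaf, $e^{\text{leaf\_value}}\, w_{original} = w_{target}$. This gives: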

$$ \text{leaf\_value} = \log{\dfrac{w_{target}}{ w_{original} }} $$
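A sketch of the leaf-value computation, using a hypothetical helper `leaf_values`. Cells where one of the samples has zero total weight get value 0 here; that is my own assumption to avoid $\log 0$, not part of the formula above. The example applies the update rule with these values and checks that target and original weights then balance in every leaf.

```python
import numpy as np

def leaf_values(cell, weights, is_original, n_cells):
    """Leaf values log(w_target / w_original), one per cell.

    Cells where either sample has zero total weight get 0
    (an assumption made here to avoid log(0) and division by zero).
    """
    t = np.bincount(cell, weights=np.where(is_original, 0.0, weights), minlength=n_cells)
    o = np.bincount(cell, weights=np.where(is_original, weights, 0.0), minlength=n_cells)
    values = np.zeros(n_cells)
    ok = (t > 0) & (o > 0)
    values[ok] = np.log(t[ok] / o[ok])
    return values

# Leaf 0: original weight 1, target weight 3. Leaf 1: original 2, target 1.
cell = np.array([0, 0, 1, 1, 1])
is_original = np.array([True, False, True, True, False])
weights = np.array([1.0, 3.0, 1.0, 1.0, 1.0])
values = leaf_values(cell, weights, is_original, n_cells=2)  # [log 3, log 0.5]

# Apply the update rule: only original events are multiplied by e^value.
new_weights = np.where(is_original, np.exp(values[cell]) * weights, weights)
target_per_leaf = np.bincount(cell, weights=np.where(is_original, 0.0, new_weights), minlength=2)
original_per_leaf = np.bincount(cell, weights=np.where(is_original, new_weights, 0.0), minlength=2)
# Both per-leaf totals are now [3, 1].
```

In practice one would probably also scale these values by a learning rate before updating, as is usual in gradient boosting, so that a single tree does not over-fit the noise in small leaves.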
